Reeds is a novel dataset for research and development of robot perception algorithms. It was designed to be the most demanding dataset available for benchmarking perception algorithms, both in terms of the vehicle motions involved and the amount of high-quality data.

The logging platform consists of an instrumented boat with six high-performance vision sensors, three lidars, a 360° radar, a 360° documentation camera system and a three-antenna GNSS system, as well as a fibre-optic gyro IMU used for ground-truth measurements. All sensors are calibrated into a single vehicle frame.
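Calibrating all sensors into one vehicle frame means that each sensor has an extrinsic transform, a rotation R and a translation t, that maps its measurements into common coordinates: p_vehicle = R·p_sensor + t. The sketch below illustrates this with a 4×4 homogeneous matrix. It is a simplified, hypothetical example: it models only yaw (rotation about the vertical axis), and the mounting pose in the usage line is made up rather than taken from the Reeds calibration.

```python
import math
from typing import Sequence

Vec3 = tuple[float, float, float]


def make_extrinsic(yaw_rad: float, translation: Vec3) -> list[list[float]]:
    """Build a 4x4 homogeneous sensor-to-vehicle transform.

    Hypothetical simplification: only yaw is modelled; a real calibration
    would also include roll and pitch.
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def to_vehicle_frame(T: Sequence[Sequence[float]], p: Vec3) -> Vec3:
    """Map a point from the sensor frame into the vehicle frame: p_v = R p_s + t."""
    h = (p[0], p[1], p[2], 1.0)  # homogeneous coordinates
    out = [sum(T[r][k] * h[k] for k in range(4)) for r in range(3)]
    return (out[0], out[1], out[2])


# Usage: a lidar (made-up pose) mounted 1 m forward, 2 m up, rotated 90 degrees.
lidar_to_vehicle = make_extrinsic(math.pi / 2, (1.0, 0.0, 2.0))
print(to_vehicle_frame(lidar_to_vehicle, (1.0, 0.0, 0.0)))  # approximately (1.0, 1.0, 2.0)
```

Once every sensor's measurements are expressed in the vehicle frame in this way, data from different sensors can be compared or fused directly.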

World’s largest dataset for perception

The largest data volume is generated by the six high-performance cameras from the Oryx ORX-10G-71S7 series. Each camera can generate up to 0.765 GB/s (3208×2200 maximum resolution at 10-bit depth and 91 fps). By comparison, the second-largest data producers are the lidars, each writing 2,621,440 points per second, which corresponds to about 75 MB/s. Each of the two servers is connected to three cameras and writes their recordings to a 15.36 TB SSD.
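The camera figure follows directly from the sensor format. The sketch below recomputes it, assuming tightly packed 10-bit pixels and no protocol overhead. Under that assumption one camera produces about 802.8 MB/s, which matches the quoted 0.765 GB/s if that figure is read in binary units (about 765.6 MiB/s). The lidar figure is treated the same way: reading 75 MB/s as 75 MiB/s gives exactly 30 bytes per point, a per-point size inferred here rather than stated in the source.

```python
# Raw data rates implied by the sensor specifications in the text.
# Assumption: pixels are tightly packed (10 bits each) with no protocol overhead.

MIB = 1024 ** 2  # bytes per mebibyte


def camera_bytes_per_second(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed image data produced per second by one camera."""
    return width * height * bits_per_pixel / 8 * fps


cam = camera_bytes_per_second(3208, 2200, 10, 91)
print(f"camera: {cam / 1e6:.1f} MB/s = {cam / MIB:.1f} MiB/s")  # camera: 802.8 MB/s = 765.6 MiB/s

# Lidar: the stated ~75 MB/s is read here as 75 MiB/s (an assumption).
lidar_points_per_second = 2_621_440
lidar_bytes_per_second = 75 * MIB
print(lidar_bytes_per_second / lidar_points_per_second)  # 30.0 (bytes per point)
```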

At these data rates, the full logging system can record for around 76 min before the disks are full. Since each server writes to its own disk, a full trip of data corresponds to two such disks, about 30 TB in total.
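Recording time is disk capacity divided by the sustained write rate to that disk. The worked example below inverts that relation to show the average per-disk write rate that the stated 76 min corresponds to, and checks the two-disk trip total. It assumes decimal units (1 TB = 10^12 bytes), which the text does not specify, and the helper names are illustrative only.

```python
# Relation between disk capacity, sustained write rate and recording time.
# Assumption: decimal units (1 TB = 10**12 bytes, 1 GB = 10**9 bytes).

TB = 10 ** 12
GB = 10 ** 9


def recording_minutes(capacity_bytes: float, write_rate: float) -> float:
    """Minutes until a disk of `capacity_bytes` fills at `write_rate` bytes/s."""
    return capacity_bytes / write_rate / 60


def implied_write_rate(capacity_bytes: float, minutes: float) -> float:
    """Average write rate (bytes/s) that fills `capacity_bytes` in `minutes`."""
    return capacity_bytes / (minutes * 60)


disk_capacity = 15.36 * TB
rate = implied_write_rate(disk_capacity, 76)
print(f"{rate / GB:.2f} GB/s per disk")                # 3.37 GB/s per disk
print(round(recording_minutes(disk_capacity, rate)))   # 76
print(f"trip total: {2 * disk_capacity / TB:.2f} TB")  # trip total: 30.72 TB
```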

Fair benchmarking

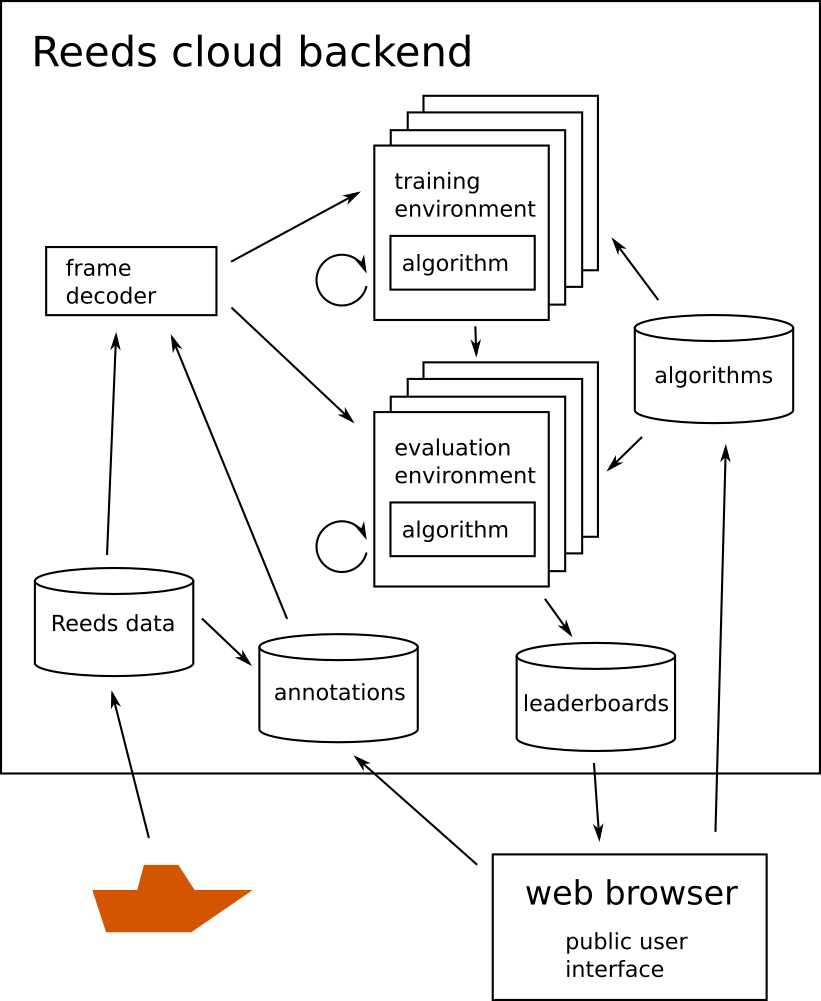

To make benchmarking fairer, and to avoid moving large amounts of data, the Reeds dataset comes with an evaluation backend running in the cloud. External researchers upload their algorithms as source code or as binaries, and the evaluation and comparison with other algorithms are then carried out automatically.

The data collection routes were planned to support stereo vision and depth perception, optical and scene flow, odometry and simultaneous localization and mapping (SLAM), object classification and tracking, semantic segmentation, and scene and agent prediction.

The automated evaluation measures not only algorithm accuracy against annotated data or ground-truth positioning, but also per-frame execution time and feasibility for formal real-time operation, expressed as the standard deviation of the per-frame execution time. In addition, each algorithm is tested on 12 preset combinations of resolution and frame rate, each corresponding to a combination used in another dataset. With this design, Reeds can be viewed as a superset of those datasets, since it can mimic their data properties.
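The timing part of the evaluation can be illustrated with a small profiling harness. This is a sketch, not the Reeds backend: `profile_per_frame` is a hypothetical helper, and its `budget_s` per-frame deadline (for example 1/91 s, about 11 ms, at the cameras' full frame rate) is an assumption added for illustration. It times one call per frame and reports the mean, the standard deviation used as the real-time indicator, the worst case, and the number of frames that exceed the budget.

```python
import statistics
import time
from typing import Any, Callable, Iterable


def profile_per_frame(
    process: Callable[[Any], Any],
    frames: Iterable[Any],
    budget_s: float,
) -> dict[str, float]:
    """Run `process` once per frame and summarise its execution times.

    Hypothetical helper; requires at least one frame. `budget_s` is an
    assumed per-frame deadline in seconds.
    """
    durations = []
    for frame in frames:
        start = time.perf_counter()  # monotonic, high-resolution clock
        process(frame)
        durations.append(time.perf_counter() - start)

    return {
        "mean_s": statistics.fmean(durations),
        # Spread of per-frame times: the real-time indicator described above.
        "stdev_s": statistics.stdev(durations) if len(durations) > 1 else 0.0,
        "max_s": max(durations),
        "deadline_misses": sum(d > budget_s for d in durations),
    }


# Usage: a dummy workload stands in for a perception algorithm.
stats = profile_per_frame(lambda n: sum(range(n)), range(1000, 1100), budget_s=1 / 91)
print(stats)
```

Reporting the spread alongside the mean matters because an algorithm with a low average time but occasional long frames can still miss real-time deadlines.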